Despite fake images of Taylor Swift and her fans supporting former President Donald Trump, 2024 is not shaping up to be the AI election that was feared. But there’s still time.

The recent artificial intelligence boom led to stark warnings that 2024 would be the “AI election”: people wouldn’t know what’s real and what isn’t, and misinformation would run rampant, with the technology making false content easier to create and quicker to aim at its targets.

“Coming into 2024, people were dubbing this the AI election, and we were anticipating comprehensive and widespread uses of AI-generated content to either mislead voters or promote inaccurate information about candidates,” says Rachel Orey, the director of the Bipartisan Policy Center’s elections project. “There have been certain instances of that happening, but it has been far from the groundswell that we were initially anticipating.”

“This is not going to be the AI election. People were talking about it as though AI is going to swamp everything, and it was clearly a little too soon,” says Dave Karpf, an associate professor at the George Washington University School of Media and Public Affairs. “AI might define 2028, but it’s not the AI election.”

That’s not to say that AI isn’t present in the presidential election. It surely is.

Just this week, former President Donald Trump got in hot water for sharing AI-generated images of Taylor Swift and her fans wearing “Swifties for Trump” shirts. One image showed Swift dressed as Uncle Sam, with the caption “Taylor wants you to vote for Donald Trump.”

Trump shared the images with the caption, “I accept!” on his Truth Social platform, implying that Swift had endorsed him. Swift has not endorsed a candidate for president this election, but she endorsed President Joe Biden in the 2020 election.

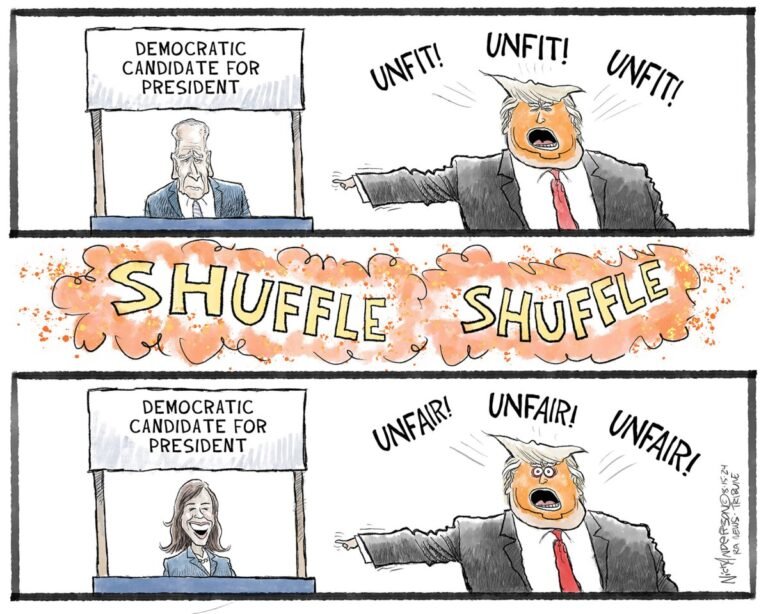

And it’s not the first time Trump has shared AI-generated content. He recently posted a deepfake video of himself and Elon Musk dancing, as well as an AI-generated image of Vice President Kamala Harris holding a communist rally at the Democratic National Convention.

Ironically, Trump, who is notoriously sensitive about crowd sizes, recently claimed that the Harris campaign used AI to lie about a crowd attending a campaign event in Detroit.

Trump posted: “There was nobody at the plane, and she ‘A.I.’d’ it, and showed a massive ‘crowd’ of so-called followers, BUT THEY DIDN’T EXIST!”

The image, however, was real. But the exchange serves as an example of one of the downsides to AI in elections: facilitating voter distrust.

“There’s a sense that artificial intelligence has introduced a new kind of fakery and new kind of reality to the civic information environment, and people didn’t even trust their instincts,” says Lee Rainie, the director of Elon University’s Imagining the Digital Future Center.

The lack of trust could lead to declining civic engagement and altered perceptions of election legitimacy, according to Orey.

“AI has these unintended consequences, one of them being that voters might not trust anything that they see,” Orey says.

But the risk that 2024 could become the AI election isn’t fully gone. More than 70 days remain until Election Day.

“While AI hasn’t upended the 2024 election season, the risk isn’t over,” Orey says. “If anything, it might be smart for malign foreign or domestic actors to let concern dissipate in the lead-up to the election and then spread false AI-generated content once voting begins.”

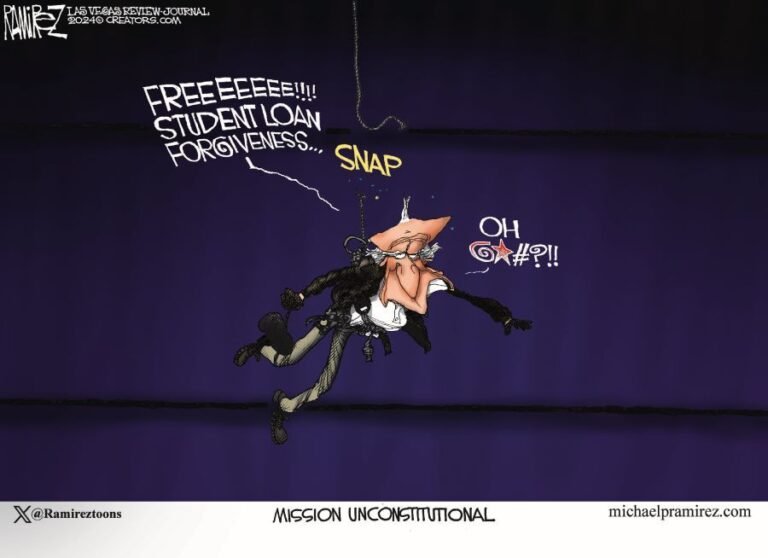

Before the New Hampshire primary in January, an AI-generated robocall that mimicked Biden’s voice told listeners that “your vote makes a difference in November, not this Tuesday,” in an effort to suppress voter turnout.

The telecommunications company that transmitted the calls will pay a $1 million fine, the Federal Communications Commission announced Wednesday.

“Voter intimidation, whether carried out in person or by way of deepfake robocalls, online disinformation campaigns, or other AI-fueled tactics, can stand as a real barrier for voters seeking to exercise their voice in our democracy,” Assistant Attorney General Kristen Clarke of the Justice Department’s Civil Rights Division said in a statement. “Every voter has the fundamental right to cast their ballot free from unlawful intimidation, coercion and disinformation schemes.”

The FCC earlier this month proposed its first rules on AI-generated robocalls and robotexts, which would require callers to disclose their use of AI in calls and text messages. But the regulatory process could take years, so voters should stay alert to these tactics now.

“Once voting begins, the potential for the impact of false information, whether it’s about candidates themselves or about the time, place or manner of voting, increases,” Orey says.
